Update Autumn 2025:

We have now implemented gigapixel viewing in our online collection. See, for example: https://www.nasjonalmuseet.no/samlingen/objekt/NG.M.00841

How do we let you zoom from gallery view to a single brush‑stroke?

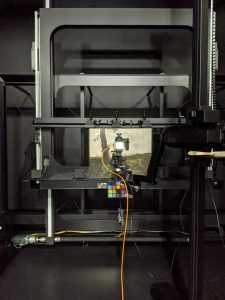

When our photo team wheels a two‑metre‑high steel easel into the studio, it looks more like engineering than art history. The frame—custom‑built with Trosterud Mekaniske and fitted with Siemens stepper motors—moves a painting a few millimetres at a time while a 400‑megapixel Hasselblad Multishot camera fires off exposures. For a modest‑sized canvas that means two hundred overlapping photos; for a Munch mural it can be well over a thousand. Back at the workstation, Stina stitches the shots into a gigapixel master file that tops half a gigabyte. Lovely, but far too big for the web—unless we cheat a little.

The trick is an open standard called IIIF (pronounced triple‑I‑F). Think of it as Google Maps for paintings: instead of sending you every pixel, our server sends only the tiles you can actually see. That means your phone pulls down a few hundred kilobytes, not 800 megabytes, and you still get to swoop straight into the canvas grain. The result feels like magic; it’s really just smart file prep, a fast image server and a lean JavaScript viewer working in concert.
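For readers who like to see the idea in code, here is a minimal sketch of the "only the tiles you can see" principle. It is an illustration, not the code our viewer runs: the function name `visible_tiles` and the example numbers are made up for this post, and it assumes a simple grid of 256-pixel tiles with all coordinates given in pixels of the zoom level being shown.

```python
TILE = 256  # tile edge in pixels (the tile size used for our pyramidal TIFFs)


def visible_tiles(view_x, view_y, view_w, view_h, level_w, level_h, tile=TILE):
    """Return the (column, row) indices of tiles that overlap the viewport.

    Hypothetical helper for illustration. All arguments are integers in
    pixels of the pyramid level currently displayed.
    """
    # Clamp the viewport rectangle to the image bounds at this level.
    x0 = max(0, view_x)
    y0 = max(0, view_y)
    x1 = min(level_w, view_x + view_w)
    y1 = min(level_h, view_y + view_h)
    if x0 >= x1 or y0 >= y1:
        return []  # the viewport lies entirely outside the image

    # Integer division maps a pixel coordinate to its tile index.
    first_col, last_col = x0 // tile, (x1 - 1) // tile
    first_row, last_row = y0 // tile, (y1 - 1) // tile
    return [
        (col, row)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    ]


# A 600 x 400 px window onto a 4096 x 4096 px level touches 6 of its 256 tiles.
tiles = visible_tiles(300, 100, 600, 400, 4096, 4096)
print(len(tiles), "tiles needed:", tiles)
```

In this made-up example the browser would request six tiles and leave the other 250 on the server until you pan or zoom.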

What happens after the shutter clicks?

- Stitch & Slice – The master TIFF is saved in multiple resolutions, each broken into 256 × 256 pixel tiles. (The format is called pyramidal TIFF; picture a stack of ever‑smaller images.)

- Serve – An IIPImage server speaks the IIIF Image API. When the viewer asks for `/full/512,/0/default.jpg`, the server builds that exact thumbnail on the fly, in under 50 ms.

- View – OpenSeadragon lives in your browser tab. It keeps track of which tiles sit inside the viewport, fetches them in parallel and double‑buffers them so the image never tears during a fast pan.
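The request in the Serve step follows the IIIF Image API pattern `{identifier}/{region}/{size}/{rotation}/{quality}.{format}`. Here is a small sketch of how such a URL is put together. The base URL `https://iiif.example.org/iiif/3` is a placeholder, and using the object number `NG.M.00841` as the image identifier is an assumption made purely for illustration; our real endpoint and identifiers may look different.

```python
from urllib.parse import quote


def iiif_image_url(base, identifier, region="full", size="max",
                   rotation="0", quality="default", fmt="jpg"):
    """Build an IIIF Image API request URL (hypothetical helper).

    region: "full" or "x,y,w,h" in full-resolution pixels
    size:   "max", or "w," to scale to a given width
    """
    # Identifiers must be URL-encoded so that characters like "/" stay intact.
    ident = quote(identifier, safe="")
    return f"{base.rstrip('/')}/{ident}/{region}/{size}/{rotation}/{quality}.{fmt}"


BASE = "https://iiif.example.org/iiif/3"  # placeholder, not our real server

# The whole image scaled to 512 px wide (the thumbnail request from the text).
print(iiif_image_url(BASE, "NG.M.00841", size="512,"))

# One 256 x 256 px region at full resolution, i.e. a single deep-zoom tile.
print(iiif_image_url(BASE, "NG.M.00841", region="2048,1024,256,256", size="256,"))
```

Because every request is just a URL, tiles are easy to cache, and any IIIF-aware viewer can talk to any IIIF-aware server.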

Even if you spend minutes exploring the picture, you’ll typically download less data than a single Instagram Reel, and on a 30 Mbps connection each new view arrives in a fraction of a second.

Technical deep‑dive (for the curious)

1 · File preparation

- Input → 16‑bit TIFF at native camera resolution (e.g. 23200 × 17400 px per shot)

- Processing → Affinity Photo scripting + custom Python to align & blend; PTGui for complex panoramas.

- Output → BigTIFF with 12 pyramid levels, tiled 256×256, LZW lossless compression.
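To make the pyramid idea concrete, the sketch below halves an image until it fits inside a single tile and counts the tiles at each level. It is a simplified model rather than our production script: the function name `pyramid_levels` is hypothetical, and the stopping rule (stop once a level fits in one 256 × 256 tile) is an assumption. How many levels a real encoder writes depends on its settings.

```python
from math import ceil


def pyramid_levels(width, height, tile=256):
    """List the levels of an image pyramid, largest first (illustrative only).

    Each level is half the size of the previous one (rounded up), and the
    last level is the first one that fits inside a single tile.
    """
    levels = []
    w, h = width, height
    while True:
        cols, rows = ceil(w / tile), ceil(h / tile)
        levels.append({"width": w, "height": h, "tiles": cols * rows})
        if w <= tile and h <= tile:
            break
        # Halve both dimensions, rounding up so no pixels are dropped.
        w, h = max(1, (w + 1) // 2), max(1, (h + 1) // 2)
    return levels


# Small worked example: a 1024 x 768 px image with 256 px tiles.
for i, level in enumerate(pyramid_levels(1024, 768)):
    print(i, level)

total = sum(level["tiles"] for level in pyramid_levels(1024, 768))
print("tiles in the whole pyramid:", total)
```

The example yields three levels (1024 × 768, 512 × 384, 256 × 192) with 12, 4 and 1 tiles. The smaller levels add only about a third on top of the full-resolution tiles, which is why pyramids are cheap to store.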

2 · Server stack

NGINX (TLS + Brotli + cache‑headers)

↳ FastCGI → IIPImage 1.2 (IIIF v3 endpoint)

↳ ZFS on NVMe SSD pool (0.2 ms seek)

- Average render latency (full‑res tile, first hit): 41 ms

- Peak throughput (single node, 16 threads): 1 750 tiles/s

- Horizontal scaling: stateless; nodes register behind Azure Load Balancer.

3 · Viewer tuning (OpenSeadragon v4.0‑beta)

- Tile prefetch: up to 2 levels ahead while user drags/zooms.

- Memory budget: 120 tiles in RAM (~24 MB) before LRU eviction.

- Viewport smoothing: requestAnimationFrame with 60 fps target.

- Accessibility: keyboard panning, narrated zoom levels via ARIA‑live regions.
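The memory budget above is a least-recently-used (LRU) policy: when the cache is full, the tile that has gone longest without being drawn is discarded first. The sketch below shows the idea in a few lines. The class `TileCache` is a hypothetical illustration, not OpenSeadragon's internal cache, and the memory estimate assumes uncompressed 8-bit RGB tiles, which the figures above do not spell out.

```python
from collections import OrderedDict


class TileCache:
    """A tiny LRU cache for decoded tiles (illustrative, not OpenSeadragon code)."""

    def __init__(self, max_tiles=120):
        self.max_tiles = max_tiles
        self._tiles = OrderedDict()  # least recently used tile sits first

    def get(self, key):
        """Return a cached tile and mark it as recently used, or None on a miss."""
        if key not in self._tiles:
            return None
        self._tiles.move_to_end(key)
        return self._tiles[key]

    def put(self, key, tile):
        """Store a tile, evicting the least recently used ones if over budget."""
        self._tiles[key] = tile
        self._tiles.move_to_end(key)
        while len(self._tiles) > self.max_tiles:
            self._tiles.popitem(last=False)

    def __len__(self):
        return len(self._tiles)


# Assumption: each tile is held as uncompressed 8-bit RGB (3 bytes per pixel).
TILE_BYTES = 256 * 256 * 3
print(f"120 tiles = {120 * TILE_BYTES / 1_000_000:.1f} MB")

cache = TileCache(max_tiles=2)
cache.put((0, 0), "tile A")
cache.put((1, 0), "tile B")
cache.get((0, 0))            # touching A makes B the oldest entry
cache.put((2, 0), "tile C")  # over budget, so B is evicted
print(cache.get((1, 0)))     # None
```

Under that RGB assumption, 120 tiles come to roughly 23.6 MB, in line with the ~24 MB budget quoted above.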

4 · Real‑world bandwidth

| Action | Tiles | Data | Transfer time @ 30 Mbps |
|---|---|---|---|
| Initial fit‑to‑screen | 9 | ~160 kB | 43 ms |
| Zoom 2× (HD monitor) | 16 | ~290 kB | 77 ms |
| Zoom 8× (brush‑stroke) | 25 | ~460 kB | 124 ms |
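The transfer times in the table are simple arithmetic: payload size in bits divided by link speed. The sketch below reproduces the calculation, assuming 1 kB = 1000 bytes and ignoring latency, TLS handshakes and protocol overhead. The function name `transfer_ms` is ours for this example.

```python
def transfer_ms(kilobytes, mbps=30):
    """Ideal transfer time in milliseconds for a payload on a given link.

    Assumes 1 kB = 1000 bytes and 1 Mbps = 1,000,000 bits per second,
    with no latency or protocol overhead.
    """
    bits = kilobytes * 1000 * 8
    return bits / (mbps * 1_000_000) * 1000


for action, kb in [("fit-to-screen", 160), ("zoom 2x", 290), ("zoom 8x", 460)]:
    print(f"{action}: {transfer_ms(kb):.1f} ms")
```

The results land within a millisecond or two of the table; the payload sizes there are rounded, so small differences are expected.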

5 · Beyond RGB

IIIF isn’t limited to colour photos. We’re also able to publish:

- Infra‑red & X‑ray layers as separate IIIF services that can be toggled on/off.

- Depth maps captured with structured‑light 3‑D scanning, useful for conservation.

- Machine‑learning overlays that auto‑tag tiny motifs (think insects in Baroque still‑lifes).

Further reading

- IIIF Image API v3 – <https://iiif.io>

- IIPImage user guide – <https://iipimage.sourceforge.io>

- OpenSeadragon docs – <https://openseadragon.github.io>

For most visitors the only visible sign of all this is the thrill of spotting a stray brush hair embedded in the varnish—without ever noticing the clever plumbing that got the pixels there. Happy zooming!